The Great AI Trade: Energy

Betting on the energy supercycle

Hi there, this is our 60,000-subscriber special. It took us more than a month to research and put together. We hope you enjoy it (and there’s no paywall on this one!)

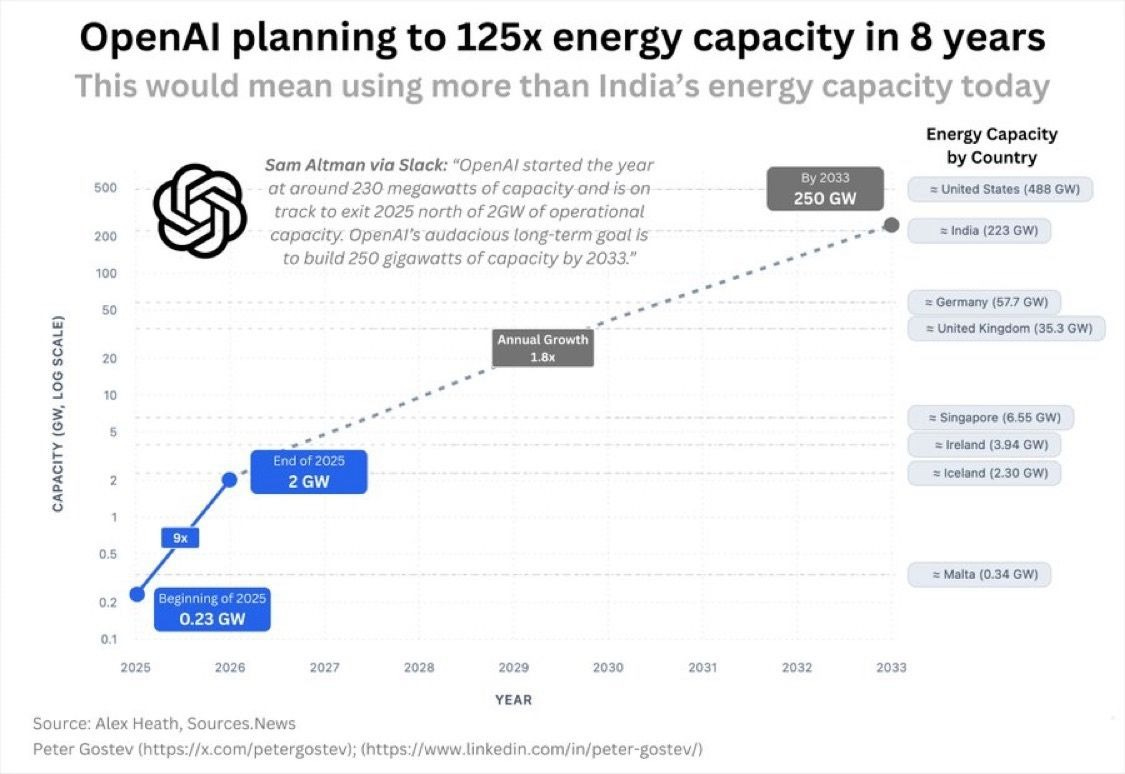

OpenAI wants to build 250 GW of compute capacity by 2033. That is more than India’s entire peak power demand in 2025. To put that into perspective, a company with fewer than 10,000 employees aims, in less than a decade, to draw more power than the world’s 4th-largest economy, home to nearly 300 million households, does at its peak.
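GW measures power, not energy, so a quick conversion helps put the 250 GW target in context. This is a minimal back-of-envelope sketch. It assumes the capacity runs continuously at a given utilization, which overstates real-world consumption at full load. The 0.6 utilization case is a hypothetical comparison, not a figure from the article.

```python
# Back-of-envelope conversion of the 250 GW target into annual energy.
# ASSUMPTION: capacity runs continuously at the given utilization (1.0 = full
# load around the clock), which overstates real-world consumption.

HOURS_PER_YEAR = 8760


def annual_energy_twh(capacity_gw: float, utilization: float = 1.0) -> float:
    """Convert a power capacity (GW) into annual energy (TWh)."""
    # GW x hours = GWh; divide by 1,000 for TWh
    return capacity_gw * utilization * HOURS_PER_YEAR / 1000


def watts_per_household(capacity_gw: float, households: float) -> float:
    """Spread a capacity figure evenly across a number of households."""
    return capacity_gw * 1e9 / households


OPENAI_TARGET_GW = 250      # from the article
INDIA_HOUSEHOLDS = 300e6    # "nearly 300 million households"

for utilization in (1.0, 0.6):   # 0.6 is a hypothetical lower utilization
    print(f"utilization {utilization:.0%}: "
          f"{annual_energy_twh(OPENAI_TARGET_GW, utilization):,.0f} TWh/yr")
print(f"~{watts_per_household(OPENAI_TARGET_GW, INDIA_HOUSEHOLDS):,.0f} W per household")
```

At full load, the target works out to roughly 2,190 TWh a year, or about 830 W for each of those households.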

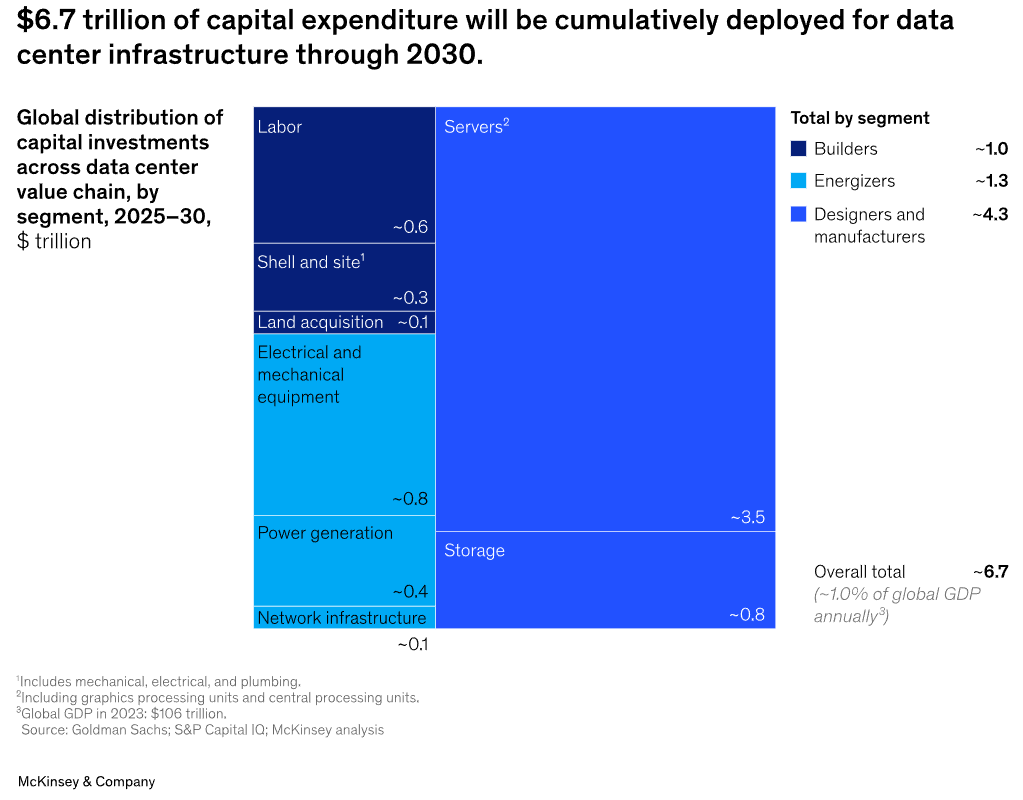

Computing capacity lies at the bedrock of the AI economy. According to McKinsey, nearly USD 7 trillion will flow into data centers globally by 2030 to power computing, with more than 40 percent of that going to the US. While such investment has the potential to drive massive economic growth, it isn’t without challenges.

Not long ago, the choke point in building AI compute capacity was GPU production. GPUs, or graphics processing units, are specialized chips that perform thousands of calculations in parallel, which makes them ideal for training and running AI models. However, if Mark Zuckerberg is to be believed (and I’m sure he’d know a thing or two about this), that bottleneck has recently eased, making way for another one: energy.

Intelligence at scale now depends on physical infrastructure: turbines, reactors, transmission lines. The real race is no longer just about training larger models; it’s also about generating enough power to keep them running.

Why AI=Energy

AI may look like software, but under the hood, it behaves much like heavy industry. Every model, from GPT to Gemini, runs on compute clusters that consume power at the scale of small cities. Training a frontier model today can require tens of megawatts for weeks, and inference, the process of serving those models to users, runs indefinitely. Winning the AI race today requires access to energy at levels previously unimaginable.

Understanding AI’s power appetite

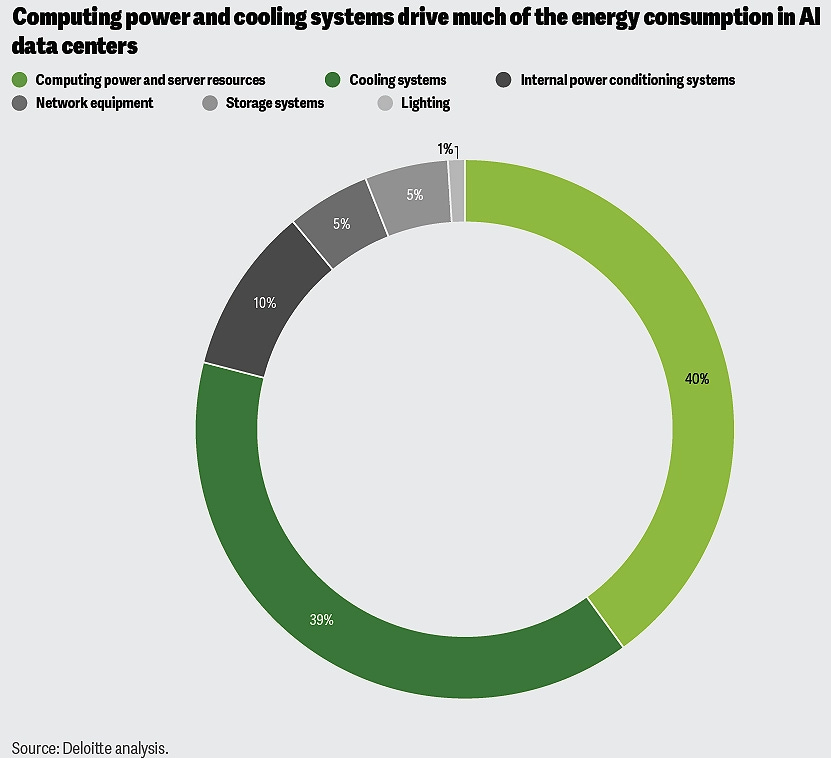

Two broad areas drive most of the electricity consumption in a data center: computing power and cooling. Computing and server systems account for roughly 40% of a data center’s energy draw.

Cooling systems take almost as much, consuming another 38–40% to keep the servers from melting under the heat of nonstop computation.
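The quoted shares imply a useful ratio. For every unit of energy delivered to servers, the facility draws roughly 2.5 units in total. Here is a minimal sketch of that arithmetic. It assumes everything other than compute and cooling falls into a single hypothetical "other" bucket. It also uses 39% as a default cooling share, which is simply the midpoint of the quoted range.

```python
# Split a data center's electricity draw using the shares quoted above.
# ASSUMPTION: everything that is neither compute nor cooling (power conversion,
# lighting, etc.) is lumped into a hypothetical "other" bucket; 0.39 as the
# default cooling share is simply the midpoint of the quoted 38-40% range.

def draw_breakdown(total_mwh: float, compute_share: float = 0.40,
                   cooling_share: float = 0.39) -> dict:
    """Return the MWh per category plus two overhead ratios."""
    other_share = 1.0 - compute_share - cooling_share
    return {
        "compute": total_mwh * compute_share,
        "cooling": total_mwh * cooling_share,
        "other": total_mwh * other_share,
        # kWh spent on cooling for every kWh delivered to servers
        "cooling_per_compute": cooling_share / compute_share,
        # total facility energy per kWh of compute energy
        "total_per_compute": 1.0 / compute_share,
    }


for cooling in (0.38, 0.40):
    b = draw_breakdown(100.0, cooling_share=cooling)
    print(f"cooling {cooling:.0%}: {b['cooling_per_compute']:.2f} kWh of cooling per "
          f"kWh of compute; facility draws {b['total_per_compute']:.1f}x the compute load")
```

Read this way, the quoted shares mean cooling adds roughly 0.95 to 1.0 kWh for every kWh of computation.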

At the heart of computing lie two critical stages that shape power demand.

Training: Before you can ask an AI model to plan your trip or generate a video, it must first learn. Think of it as teaching a supercomputer how to think by feeding it mountains of data (books, code, conversations, images) until it starts recognizing patterns and relationships. During this phase, tens of thousands of GPUs work for weeks or months, each performing trillions of calculations per second. It’s an electricity-hungry marathon. For instance, training OpenAI’s GPT-4 alone is estimated to have cost over USD 100 million and consumed 50 GWh of electricity, enough to keep San Francisco lit for three days.

Inference: Once trained, the model starts working for you. Every question you ask ChatGPT, every AI-generated image, and every Copilot suggestion triggers billions of calculations inside massive data centers worldwide. By some estimates, the average ChatGPT query uses 10 times as much energy as a standard Google search. Rather than falling away once training ends, the electricity footprint shifts into a constant draw as these models serve billions of queries every day.
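The two stages can be compared with some rough arithmetic. This sketch is a back-of-envelope model, not a measurement. Only the 50 GWh, three-day, and 10x figures come from the text. The 90-day training duration, the 0.3 Wh per search, and the 2.5 billion daily queries are hypothetical placeholders, chosen only to show the mechanics.

```python
# Rough energy arithmetic for the two stages described above.
# ASSUMPTIONS (hypothetical, not from the article): GPT-4's training run lasted
# ~90 days; a standard search uses ~0.3 Wh; the service handles 2.5 billion
# queries per day. Only the 50 GWh, 3-day, and 10x figures come from the text.

TRAINING_ENERGY_GWH = 50      # GPT-4 estimate quoted above
SF_DAYS_EQUIVALENT = 3        # "enough to keep San Francisco lit for three days"
ASSUMED_TRAINING_DAYS = 90    # hypothetical

SEARCH_WH = 0.3               # hypothetical energy per standard search
QUERY_MULTIPLIER = 10         # ChatGPT query vs. standard search, per the text
QUERIES_PER_DAY = 2.5e9       # hypothetical daily query volume


def avg_power_mw(energy_gwh: float, days: float) -> float:
    """Average power (MW) needed to deliver `energy_gwh` over `days`."""
    return energy_gwh * 1000 / (days * 24)


def daily_inference_gwh(queries_per_day: float, wh_per_query: float) -> float:
    """Daily inference energy in GWh (1 GWh = 1e9 Wh)."""
    return queries_per_day * wh_per_query / 1e9


training_mw = avg_power_mw(TRAINING_ENERGY_GWH, ASSUMED_TRAINING_DAYS)
sf_gwh_per_day = TRAINING_ENERGY_GWH / SF_DAYS_EQUIVALENT
inference_gwh = daily_inference_gwh(QUERIES_PER_DAY, SEARCH_WH * QUERY_MULTIPLIER)

print(f"Training: ~{training_mw:.1f} MW average over {ASSUMED_TRAINING_DAYS} days")
print(f"Implied San Francisco use: ~{sf_gwh_per_day:.1f} GWh/day")
print(f"Inference: ~{inference_gwh:.1f} GWh/day, "
      f"~{avg_power_mw(inference_gwh, 1):.0f} MW around the clock")
print(f"Days of inference to match the training run: "
      f"{TRAINING_ENERGY_GWH / inference_gwh:.1f}")
```

Under these assumptions, the training run averages about 23 MW, in line with "tens of megawatts." At the assumed volume, inference overtakes the entire training budget in about a week. Swapping in other estimates shows how sensitive that conclusion is.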

And all this relentless computing generates heat, which has to go somewhere. That’s where cooling becomes the invisible yet massive energy sink. Each GPU rack can generate enormous amounts of heat, and maintaining stable temperatures can consume nearly as much energy as the compute itself. As chip density increases, so does the need for more advanced, energy-intensive cooling systems.

In essence, AI’s energy appetite is a loop: computation generates heat, and cooling consumes additional power to tame it. Every new leap in intelligence, therefore, demands a matching leap in energy supply.

The Gigawatt Arms Race

Let’s go back to the beginning, when we discussed OpenAI’s plans to build 250 GW of computing capacity by 2033. To some, it may appear audacious; to others, even absurd. But one glance at its growth curve tells a different story.

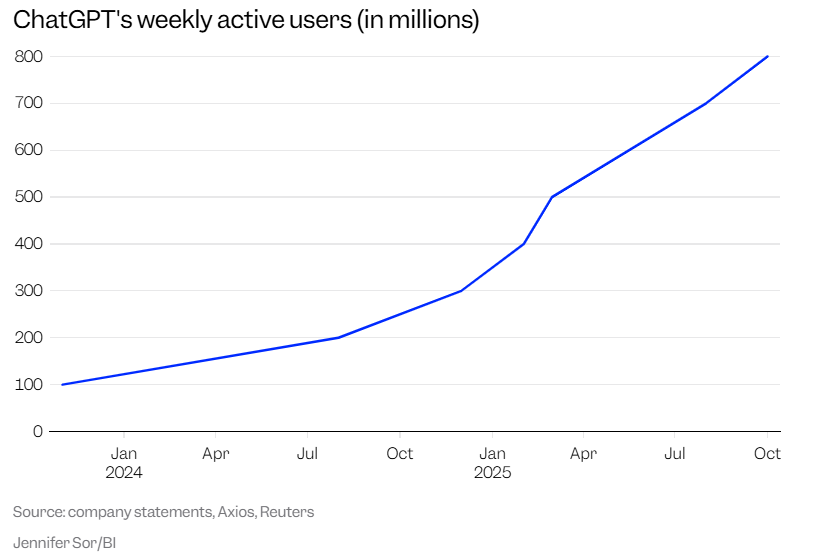

ChatGPT now has over 800 million weekly active users — that’s nearly one-tenth of humanity engaging with a product that didn’t exist three years ago.

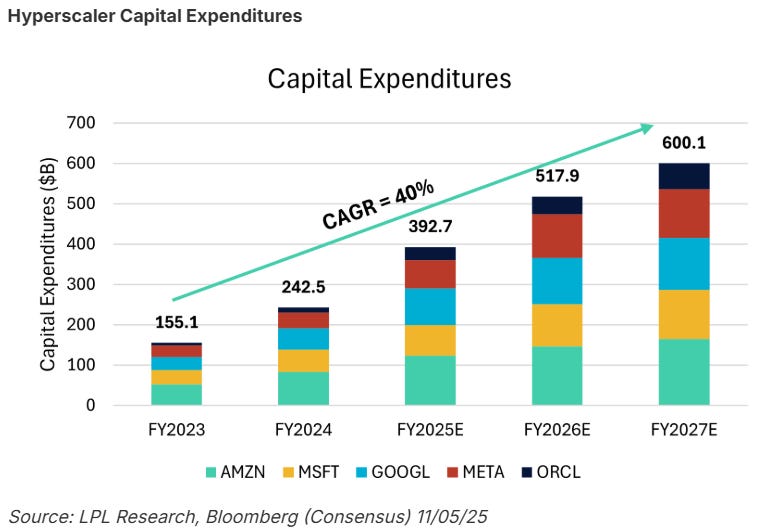

And OpenAI isn’t alone in reading the curve this way. Every major hyperscaler (Amazon, Microsoft, Google, Meta) knows this is a race it can’t afford to lose. That is now showing up in their capex, which has reached nearly 60 percent of their cumulative operating cash flow.

The Economics of Urgency

AI may well reshape everything from how we search to how we work — but what’s the rush? Why are tech giants racing to spend hundreds of billions on data centers right now?

The answer lies in two powerful dynamics.

Network effects are what make a product more valuable as more people use it. Take a messaging app: it isn’t particularly useful if it has only a few users, but it can quickly become indispensable once it has many. The same rules apply to AI. Every new user typing in queries, every new startup building tools on top of APIs, and every new enterprise training models on its own data feeds information back into the ecosystem. Eventually, these effects form powerful feedback loops that are nearly impossible for competitors to replicate.

Operating leverage, on the other hand, is about turning that scale into profit. AI models are expensive to build and train, but once they’re ready, serving additional users costs very little. After a point, each extra query or customer adds revenue without meaningfully increasing expenses. It’s a setup where heavy upfront investment can eventually drive outsized returns.

In essence, scaling early in AI isn’t merely a choice. It may be what allows these players to build enduring moats in a space that has become brutally competitive.

America’s Grid Meets the Data Center Boom

Data centers already account for over 4% of US electricity demand, and that share is climbing fast. Over the past five years, total US power generation capacity has grown at roughly 2% per year. Data center demand, by contrast, is projected to grow 15–20% annually through the decade, roughly an order of magnitude faster than the grid itself.
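Growth rates that look modest year to year diverge sharply once compounded. This minimal sketch assumes constant annual rates over a hypothetical six-year window, 2024 to 2030.

```python
# Cumulative growth over six years at the annual rates quoted above.
# ASSUMPTION: rates stay constant over a hypothetical 2024-2030 window.

def growth_multiple(annual_rate: float, years: int) -> float:
    """How many times larger a quantity becomes after compounding."""
    return (1 + annual_rate) ** years


YEARS = 6
for label, rate in [("grid capacity", 0.02),
                    ("data centers (low)", 0.15),
                    ("data centers (high)", 0.20)]:
    print(f"{label:<20} {rate:>4.0%}/yr -> {growth_multiple(rate, YEARS):.2f}x")
```

Over six years, 2% a year adds only about 13% to grid capacity. Over the same period, 15–20% a year multiplies data center demand by roughly 2.3 to 3 times.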

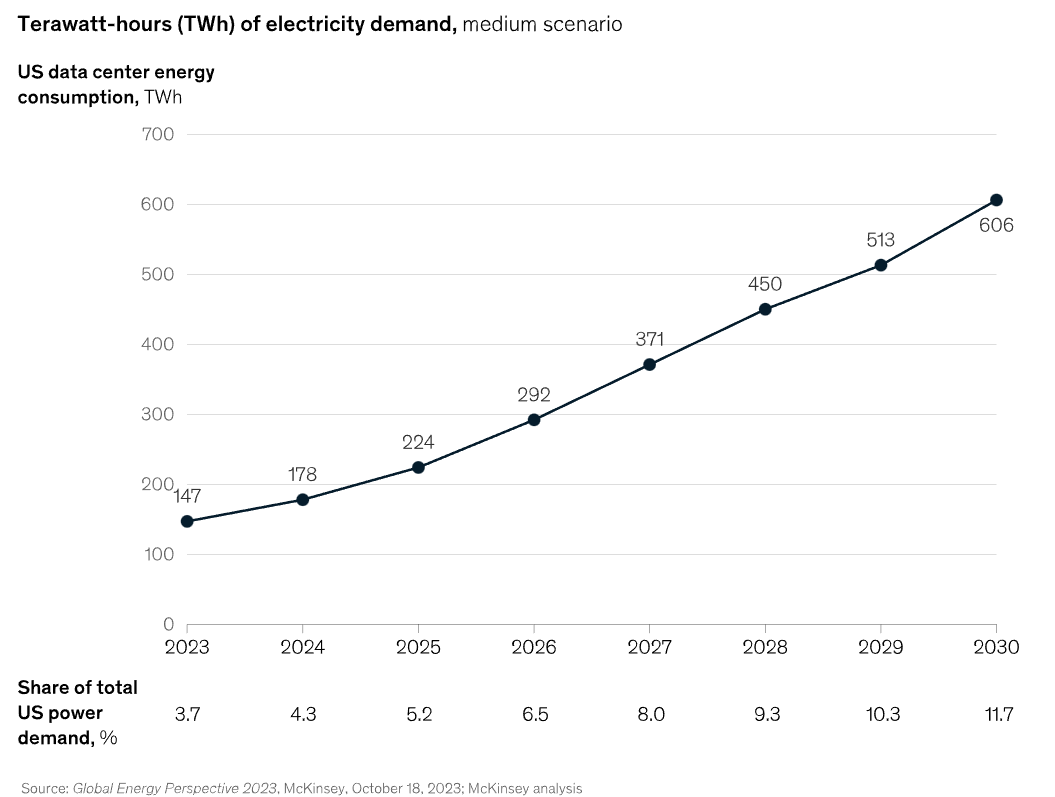

According to McKinsey, US data centers consumed around 200 terawatt-hours (TWh) of electricity in 2024 — roughly equivalent to the annual consumption of a country like Thailand. By 2030, that number could exceed 600 TWh, or nearly 12% of all U.S. power demand, driven almost entirely by AI workloads.
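The McKinsey figures can be cross-checked against the growth rates above. Tripling from 200 TWh to 600 TWh over six years implies a compound annual growth rate of about 20%, at the top of the 15–20% range. A 12% share also implies total US demand of roughly 5,000 TWh in 2030. Here is a sketch of that arithmetic, using only the numbers quoted above and treating 2024 to 2030 as six years.

```python
# Cross-check the McKinsey projection using only the figures quoted above.

def cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate between two values."""
    return (end / start) ** (1 / years) - 1


DC_2024_TWH = 200     # US data center consumption, 2024
DC_2030_TWH = 600     # projected, 2030
SHARE_2030 = 0.12     # "nearly 12% of all U.S. power demand"

print(f"Implied 2024-2030 CAGR: {cagr(DC_2024_TWH, DC_2030_TWH, 6):.1%}")
print(f"Implied total US demand in 2030: ~{DC_2030_TWH / SHARE_2030:,.0f} TWh")
```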

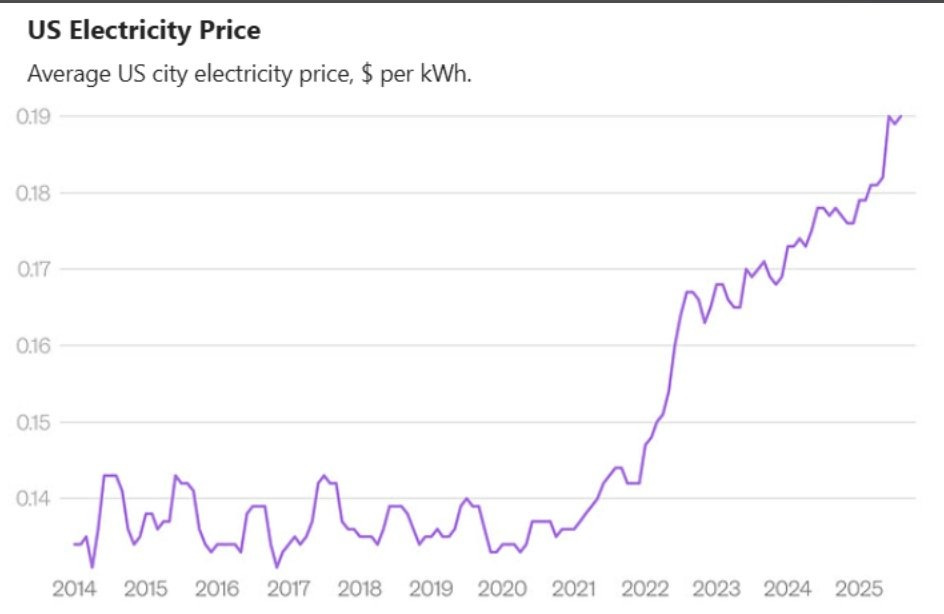

Given the recent surge in AI-led power demand, the strain is already visible in regions with dense AI data center clusters, which have seen significant electricity price increases as utilities scramble to meet the new load.

Unless the grid expands much faster than history suggests, or the data center build-out slows (and it currently shows no sign of relenting), the simple economics of scarcity imply that power prices will keep rising as users compete for every available megawatt.

The Limits of Building Your Own Generation

The first question that comes to mind is obvious: if hyperscalers are already pouring tens of billions of dollars into new data centers, and energy is now the biggest bottleneck, why not just build their own generation capacity?

As a matter of fact, most are.

Microsoft and Constellation Energy have partnered to restart part of the Three Mile Island nuclear plant in Pennsylvania, a first-of-its-kind nuclear revival to supply long-term power for data centers. Google, meanwhile, is working with Kairos Power to build a 50 MW small modular reactor in Tennessee, expected to supply its data centers in the southeastern US. Other tech giants are following suit, exploring partnerships to build or source dedicated generation capacity.

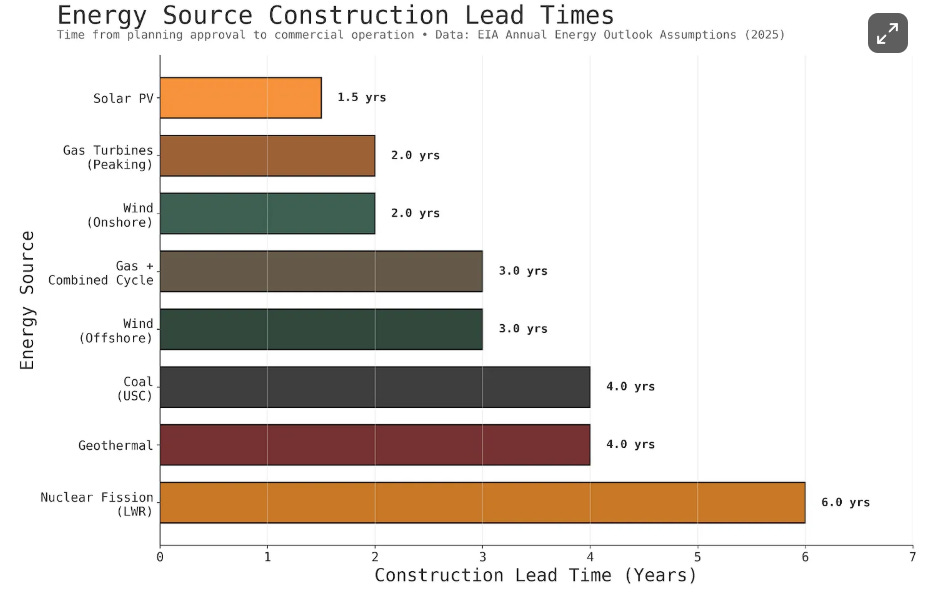

However, most of these projects stretch well into the 2030s — and that’s precisely the problem. While a new GW+ data center can go online in 12–24 months, the lead times on new power sources are far longer.

Even solar, which has one of the shortest construction timelines, faces intermittency and curtailment issues — the sun doesn’t always shine when servers need to run. Batteries help, but storage adds cost and complexity.

Then there’s the matter of capital and capability. Locking up billions in long-duration generation projects isn’t necessarily the best use of cash for companies whose core advantage lies in software and services.

Finally, consider the economics of chips, the single largest cost component of an AI data center. While a facility may depreciate over 15 years, GPUs and AI accelerators lose their value in 3–5 years. Every month these chips sit idle because of power constraints translates into millions of dollars of lost value.
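One simple way to see the cost of idle chips is straight-line depreciation. If accelerators lose their value over 3–5 years, every idle month writes off a fixed slice of it. This minimal sketch assumes a hypothetical USD 1 bn accelerator fleet and zero salvage value. Neither figure comes from the article.

```python
# Straight-line depreciation: value written off for each month chips sit idle.
# ASSUMPTIONS (hypothetical): a USD 1 bn accelerator fleet, zero salvage value,
# and useful lives at either end of the 3-5 year range quoted above.

def monthly_depreciation(fleet_cost_usd: float, useful_life_years: float) -> float:
    """Value lost per month under straight-line depreciation."""
    return fleet_cost_usd / (useful_life_years * 12)


FLEET_COST_USD = 1e9  # hypothetical

for life_years in (3, 5):
    lost = monthly_depreciation(FLEET_COST_USD, life_years)
    print(f"{life_years}-year life: ~USD {lost / 1e6:.1f} m lost per idle month")
```

Under these assumptions, even a USD 1 bn fleet loses roughly USD 17–28 m of value for each month it sits dark. The loss scales linearly with fleet cost.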

So yes — the hyperscalers need more energy, and they are working to build it. But more than anything, they need it now. And given the realities of time, cost, and grid dependence, going completely off-grid simply isn’t an option.

The Long and Short of It

AI needs energy. A lot of it. And more importantly, it needs it now.

Data center power demand in the US is rising at a pace the grid simply wasn’t built for — projected to grow nearly ten times faster than historical generation capacity growth. The result is already visible in the data: regional electricity prices are climbing, utilities are straining to meet new loads, and hyperscalers are scrambling to lock in long-term supply.

Sure, the hyperscalers are investing billions in their own generation projects. But these are expected to take years to come online, while AI buildouts are happening today. That imbalance — between how fast the grid can expand and how quickly AI infrastructure is scaling — is at the heart of this potential supercycle.

In this race for AI dominance, the real beneficiaries may not just be the chipmakers or the cloud players, but also the utilities — the only entities currently capable of delivering electricity at scale and on time.

Building an AI Energy Portfolio

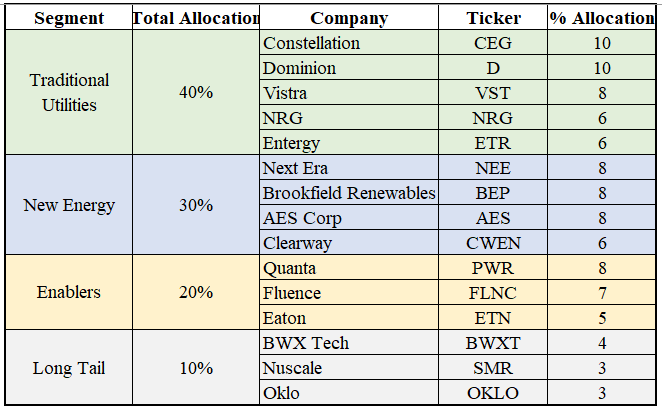

We split our portfolio into four segments, each uniquely positioned to benefit from a different aspect of AI’s accelerating power demand.

Traditional Utilities: Power producers with deep generation assets — gas, coal, and nuclear — now witnessing surging demand as AI data centers strain existing grids.

New Energy Plays: Renewable players rapidly expanding solar, wind, and hybrid capacity to meet hyperscaler demand.

Enablers: Companies building and upgrading transmission networks, substations, and grid storage to support rising demand and buildout.

Long-Tail Opportunities: Early-stage innovators and experimental plays with the potential to redefine the power sector.

The portfolio’s performance makes the energy growth story unmistakably clear. Since the launch of ChatGPT, our AI energy portfolio has compounded at a 42% CAGR, nearly double that of the S&P 500.
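A CAGR is the constant annual rate that turns a starting value into an ending value, so a gap in rates widens once you look at cumulative returns. This minimal sketch assumes a roughly three-year window since ChatGPT's launch in November 2022. The S&P 500 rate is hypothetical, set at half the portfolio's 42% to match "nearly double." It is not a reported figure.

```python
# Turn a CAGR into a cumulative return multiple.
# ASSUMPTIONS: a ~3-year window since ChatGPT's launch (November 2022), and a
# hypothetical benchmark rate set at half the portfolio's CAGR ("nearly double").

def cumulative_multiple(cagr: float, years: float) -> float:
    """Value of 1 unit invested after compounding at `cagr` for `years`."""
    return (1 + cagr) ** years


YEARS = 3
PORTFOLIO_CAGR = 0.42
BENCHMARK_CAGR = PORTFOLIO_CAGR / 2   # hypothetical, not a reported figure

for name, rate in [("AI energy portfolio", PORTFOLIO_CAGR),
                   ("S&P 500 (illustrative)", BENCHMARK_CAGR)]:
    print(f"{name:<24} {rate:.0%} CAGR -> {cumulative_multiple(rate, YEARS):.2f}x")
```

Under these assumptions, doubling the annual rate yields about 2.4 times the cumulative gain over three years (roughly 186% versus 77%).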

Let’s dig a bit deeper into the portfolio:

Traditional Utilities | 40%

The thesis here is straightforward: traditional utilities are the only entities with grid-scale capacity available today to meet surging AI-driven energy demand. Until new generation and grid infrastructure catch up, these incumbents are structurally positioned to absorb the growing load, making them the logical anchor for the portfolio.

Constellation Energy ($CEG) - 10%

The largest nuclear operator in the US can provide a highly reliable baseload, which hyperscalers prefer for their AI workloads. It has been at the forefront of Big Tech’s shift toward firm clean power, signing landmark energy agreements with both Microsoft and Meta. Its planned acquisition of Calpine would expand its reach into Texas and California, two of the fastest-growing, power-constrained data-center markets.

Dominion Energy ($D) - 10%

The world’s biggest data center-serving electric utility has connected about 450 data centers in northern Virginia’s “Data Center Alley,” the largest collection of data centers in the world. The company recently raised its 5-year capital expenditure plan from USD 43.2 bn to USD 50.1 bn for 2025-2029, driven by accelerating data center demand.

Vistra Energy ($VST) - 8%

Operates a 41 GW fleet spanning nuclear, gas, coal, and utility-scale batteries, offering flexible, dispatchable capacity needed in AI-heavy regions like Texas and the mid-Atlantic. Has aggressively expanded, recently acquiring seven gas plants totaling 2,600 MW.

NRG Energy ($NRG) - 6%

One of the largest competitive power producers in the US, with a portfolio heavily weighted toward gas and concentrated in Texas, the epicenter of AI-driven load growth.

Entergy ($ETR) - 6%

With a generation mix anchored in fossil fuels and nuclear, it is one of the largest players in the US South, one of the country’s fastest-growing data center markets.

New Energy | 30%

This is the fastest-growing segment: developers of renewable and hybrid assets, who are benefiting from record hyperscaler PPAs (Power Purchase Agreements) and fast deployment cycles. While these assets currently face supply volatility and intermittency, their developers stand to benefit in the long run as generation costs drop and storage technology matures.

NextEra ($NEE) - 8%

As the world’s leading renewables player, it is directly positioned to benefit from surging AI-driven demand for clean energy. The company expects the need for new renewable capacity to triple over the next seven years, mainly driven by data centers.

Brookfield Renewable ($BEP) - 8%

Owns and operates one of the largest renewable energy portfolios in the world. Its landmark 10.5 GW partnership with Microsoft underscores its position as a go-to provider of utility-scale clean power. With a development and operating footprint spanning North America, Europe, Asia, and Latin America, it offers rare global exposure to the next wave of hyperscaler-driven renewable energy demand.

AES Corp ($AES) - 8%

A clean energy leader operating roughly 17 GW of renewable capacity across solar, wind, and hydro, currently under contract with 5 of the Mag 7 companies.

Clearway Energy ($CWEN) - 6%

Operates 9 GW of renewable capacity and has already executed 1.8 GW of PPAs supporting data center demand. Currently building capacity aimed at serving GW-scale co-located data centers across five US states.

Enablers | 20%

This segment captures the picks-and-shovels infrastructure that utilities, developers, and data centers depend on, giving exposure to the entire build-out cycle.

Quanta Services ($PWR) - 8%

Builds high-voltage transmission, substations, and grid-hardening infrastructure that the energy stack depends on. Currently sitting on a record backlog of USD 39.2 bn.

Fluence Energy ($FLNC) - 7%

Provides utility-scale battery systems that address the intermittency associated with wind and solar energy, ensuring a reliable supply necessary for AI workloads.

Eaton Corporation ($ETN) - 5%

Leading provider of equipment and systems that physically enable data centers to draw, distribute, and condition massive loads. Recently partnered with NVIDIA to design specialized electrical systems for scalable, high-density GPU deployments.

Long Tail Opportunities | 10%

This bucket captures early-stage or experimental technologies, from small modular reactors to advanced fission systems, that could, if successful, transform the sector and offer asymmetric upside.

BWX Technologies ($BWXT) - 4%

Primary supplier of nuclear reactor components and technologies to the US government.

NuScale Power ($SMR) - 3%

A leading small modular reactor (SMR) developer, with the only modular reactor design approved by the US Nuclear Regulatory Commission (NRC).

Oklo ($OKLO) - 3%

Backed by Sam Altman, it’s designing next-generation fission-based microreactors to provide round-the-clock power for data centers and other high-demand users.
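For readers who want to track or rebalance the allocation, here is the portfolio above as a simple data structure. The `PORTFOLIO` dict and the `check_weights` helper are hypothetical illustrations, not tooling described in this piece. The check confirms that each segment's ticker weights add up to the segment weight and that the segments total 100%.

```python
# The allocation above as a data structure: segment -> (segment weight %, {ticker: weight %}).
# PORTFOLIO and check_weights are hypothetical names used for illustration.

PORTFOLIO = {
    "Traditional Utilities": (40, {"CEG": 10, "D": 10, "VST": 8, "NRG": 6, "ETR": 6}),
    "New Energy": (30, {"NEE": 8, "BEP": 8, "AES": 8, "CWEN": 6}),
    "Enablers": (20, {"PWR": 8, "FLNC": 7, "ETN": 5}),
    "Long-Tail Opportunities": (10, {"BWXT": 4, "SMR": 3, "OKLO": 3}),
}


def check_weights(portfolio: dict) -> int:
    """Raise if a segment's tickers don't sum to its weight; return the total."""
    total = 0
    for segment, (segment_weight, holdings) in portfolio.items():
        ticker_sum = sum(holdings.values())
        if ticker_sum != segment_weight:
            raise ValueError(
                f"{segment}: tickers sum to {ticker_sum}, expected {segment_weight}")
        total += segment_weight
    return total


print(f"Total allocation: {check_weights(PORTFOLIO)}%")
```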

I think most people truly miss the scale at which companies are investing in AI data centers. This year alone, we have spent twice as much as we spent to build the entire U.S. interstate highway system.

As we were wrapping up this newsletter, we came across a piece that perfectly captures our thesis: newly built AI data centers in Nvidia’s own backyard are sitting idle not for lack of chips, but because the grid can’t power them.

This isn’t merely an anecdote but a vivid reminder that AI’s frontier is no longer defined only by chips and model sizes, but also by the energy available to power them. And as AI increasingly shapes our lives and transforms the world around us, the biggest upside may belong to the companies supplying the one thing AI can’t scale without: reliable, round-the-clock energy.

That’s it for now. If you’ve made it this far, please “♡ Like” this piece. It helps us massively!

This work is provided for informational purposes only and should not be construed as legal, business, investment, or tax advice. You should always do your own research.
